At a panel of about 30 Elon University faculty members in Belk Library, computer science professor Shannon Duvall displayed instructions for how to remove a peanut butter sandwich from a VCR, written as a biblical verse in the style of the King James Bible. But it had all been written by a bot.

The bot in question is an artificial intelligence chatbot called ChatGPT, released in November 2022 by San Francisco-based research laboratory OpenAI. It is not the first of its kind — Siri, Grammarly and customer service bots are all examples of chatbot technology. But ChatGPT distinguishes itself with its ability to answer advanced, creative questions and remember the thread of dialogue.

The panel, which took place Feb. 7, provided a space for professors to describe how they might use AI functions in the classroom.

ChatGPT is built on a language model trained on massive amounts of text, much of it from the internet, and it generates human-like responses by predicting which words are likely to come next. Users can ask it to explain what a wormhole is, then ask it to explain it again like it’s talking to a 5-year-old. It can write a story about an epic car chase, then make that story more emotional, then cut it down to exactly 70 words. It can also debug code or write a detailed essay describing 1920s gender roles in “The Great Gatsby.”

Because ChatGPT can write 500-word essays in seconds and solve math questions with ease, educational institutions are taking steps to curb its use for academic dishonesty. Elon computer science professor Elizabeth von Briesen has already banned its use for assignments.

Von Briesen said her reasoning includes intellectual property concerns and the program’s inability to cite its sources. She said even if ChatGPT is used strictly as a knowledge consultant, there are ethical considerations to using a tool that rehashes text from original work it finds online.

“It may look original. … It may look like something you haven't seen before. But it's not original. It can’t come up with something completely original because it's only learning from the past,” von Briesen said. “It’s wrong to use other people’s work and not give credit for it, because people put time and energy and ingenuity and creativity into that. So what does it mean when we have a tool that gives us a complete essay but never gives us a path back to where it came from?”

There are also concerns over the accuracy of ChatGPT’s answers. A Princeton University computer science professor found that ChatGPT could fool people, particularly users who were not well-versed in the topic, with convincing responses that included fake information.

Von Briesen said she worries about false information because the internet is already flooded with inaccurate headlines and news, especially around highly charged subjects. When students rely on this information, it can reinforce the echo chamber effect. Like all AI, ChatGPT is trained on large amounts of data.

“There are biases in those datasets, right? When you ask it to write something, it is writing in a certain type of language, from a certain type of norm of the majority, white, American English,” von Briesen said.

As a result, it may generate biased responses, which can be especially dangerous when students do not fact-check or question them. These can include gender, racial and data biases. Because of this, von Briesen is concerned about students not recognizing the limitations and flaws of ChatGPT in their excitement to use it.

Despite these implications, Duvall said adapting to ChatGPT may still be the most logical approach. To professors who feel inclined to ban it, she warned that the use of AI could become increasingly difficult to detect as it becomes more advanced and more integrated into the technology we use. In fact, she said this could mark a new era where professors stop wasting effort to monitor cheating and let the consequences of relying on shortcuts catch up to students in their careers.

“I for one am tired of thinking about students cheating. Maybe this is a watershed moment for assessment that says, ‘We're not going to do it anymore, we're going to let the workplace do it,’” Duvall said.

More than one Elon professor said they see ChatGPT as a technological advancement they can embrace.

“Writing has never existed outside of technology because it is a technology. And if it's a technology, it has been evolving,” Director of the Writing Across the University Program Paula Rosinski said in the Feb. 7 panel. “We've been here before. This is not new. Writing has changed and we've changed with it.”

Rosinski and Duvall argued professors should adapt to ChatGPT rather than ban it, and they offered ideas for integrating it into assignments. The panel was also led by Julia Bleakney, English professor and director of the Writing Center; Amanda Sturgill, a journalism professor; and Jen Uno, associate director of the Center for the Advancement of Teaching and Learning.

Duvall, Rosinski, Bleakney, Sturgill and Uno suggested students could use ChatGPT for help outlining papers, fixing code, writing citations and generating practice quizzes. Rosinski also proposed it could be used as a tool in the early stages of research, like how one might use Wikipedia — not as a scholarly source, but as a way to gather general information. Duvall encouraged professors to pay attention to how ChatGPT is being used in their industries so they can prepare students for future jobs.

In her presentation, Rosinski compared the apprehension over ChatGPT to the reaction some teachers had to computers and the internet when they were introduced to classrooms decades ago.

“There was a lot of talk, ‘Students’ minds are going to turn to mush because they're just going to copy and paste and use everybody else's words,’” Rosinski said. “But we adjusted. We have journals, we have entire pedagogies, we have computer classrooms. We adjusted.”

Uno said professors can also find personal uses for it, such as drafting emails, writing test questions, refining essay prompts and developing rubrics.

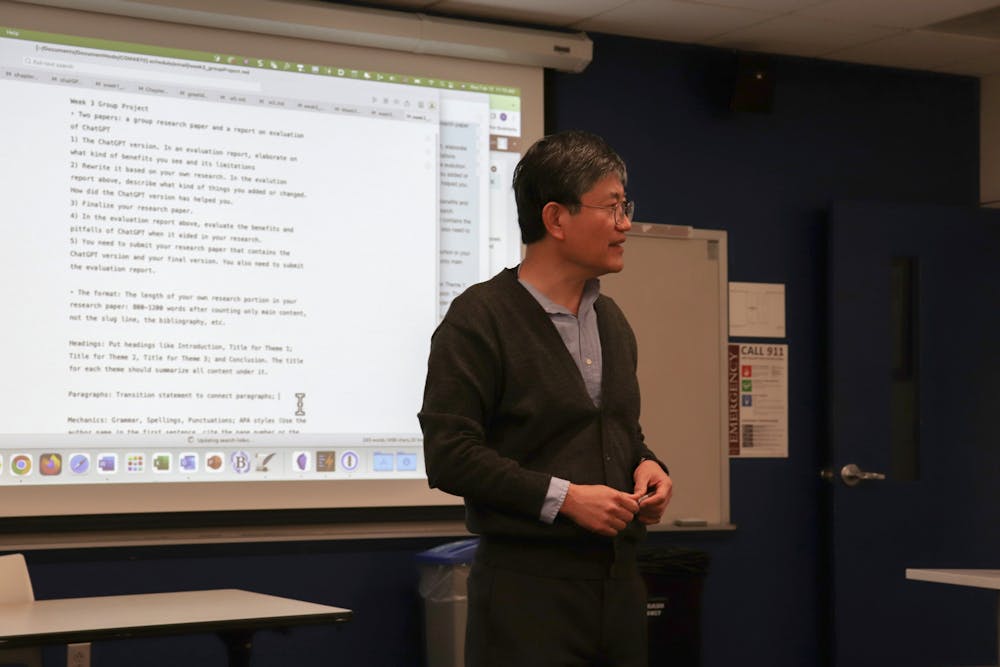

Byung Lee, professor of communication design, has already designed a research assignment centered around ChatGPT for his COM 4970 course, Great Ideas and Research. Lee said ChatGPT may replace the need for students to organize, take notes on and synthesize documents on their own. But they will need to understand how to refine their requests to get the most accurate results, which he aims to teach in his class.

“Students have to learn how to guide ChatGPT by tweaking requests until they can obtain satisfactory results. Furthermore, students should be able to determine whether the results are satisfactory,” Lee said. “Making a request once or twice and accepting the results and submitting them would not be acceptable.”

On Feb. 15, 2023, Lee instructed students in his class on how to use ChatGPT in the classroom.

In a group assignment this semester, his students are feeding their research questions to ChatGPT, evaluating the responses, editing their questions based on shortcomings of that response and then repeating this process until they are satisfied with the answer. Then they will conduct their own research using the question ChatGPT helped them make and write a paper that fills in the gaps of the chatbot’s final response. In an evaluation report, they will describe their experience working with ChatGPT, detailing how they refined their research question, what they added to the chatbot’s response and how ChatGPT helped and hindered their research process.

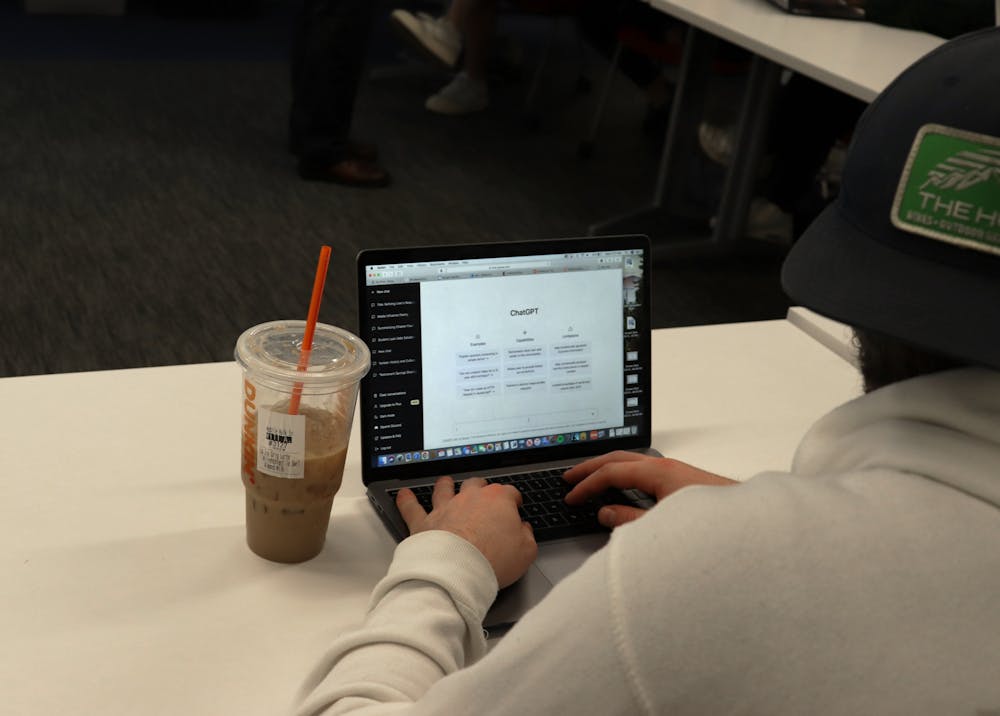

Although not in Lee’s class this semester, senior computer science major Hunter Copeland echoed the idea that students can learn to work with ChatGPT as a tool, rather than let it take over their assignments completely.

“I see it sort of how a calculator would be used for a math class,” Copeland said.

Copeland also said he thinks the use of ChatGPT is going to lead to more in-class assignments and essays, and less take-home work.

As Copeland looks toward the job market, he said he anticipates that ChatGPT or similar AI programs will change the career landscape.

Uno said she sees this as a turning point in which professors may be encouraged to better scaffold their assignments so that students can better manage their workloads without the help of ChatGPT. It could also prompt professors to create more engaging assignments so students will feel motivated to do the work on their own.

“Students are going to go to AI when they don’t like the topic they’re writing about, so how can I engage them in topics, even in topics they may not be interested in?” Uno said.

Von Briesen agreed that rather than trying to catch students using ChatGPT, professors should adjust their assignments in a way that makes it difficult for students to use ChatGPT on them.

“Maybe we need to rethink how we’re evaluating our students. Maybe in a more unique way, like through oral exams,” von Briesen said. “We have to find creative ways to assess whether or not our students have acquired the skills and knowledge that we want them to acquire.”

According to OpenAI’s website, ChatGPT is in a research preview phase, which means the company is offering it free to the public to gather feedback and better identify the program’s strengths and weaknesses. For now, all users need to do to try the program is create an account with their email and phone number. But users will likely have to pay for access in the future.

ChatGPT and other advanced AI technologies could pose sweeping changes to education, but Duvall is optimistic.

“It might seem scary now,” Duvall said. “But we'll adjust.”